Migrate to Atlas

In this article, I'm going to explain one of the several ways you can migrate an on-prem cluster to MongoDB Atlas.

In this scenario, we will migrate an on-prem sharded cluster to a sharded cluster in Atlas, with a topology change (3 shards on-prem to 1 shard in Atlas).

Create your cluster in Atlas

The first step is to create a cluster in Atlas. I will assume that you already have an Atlas account with cluster creation permissions.

Once we are in the project in Atlas, we click the "Create" button at the top right, which opens a panel where we can define the options for the new cluster.

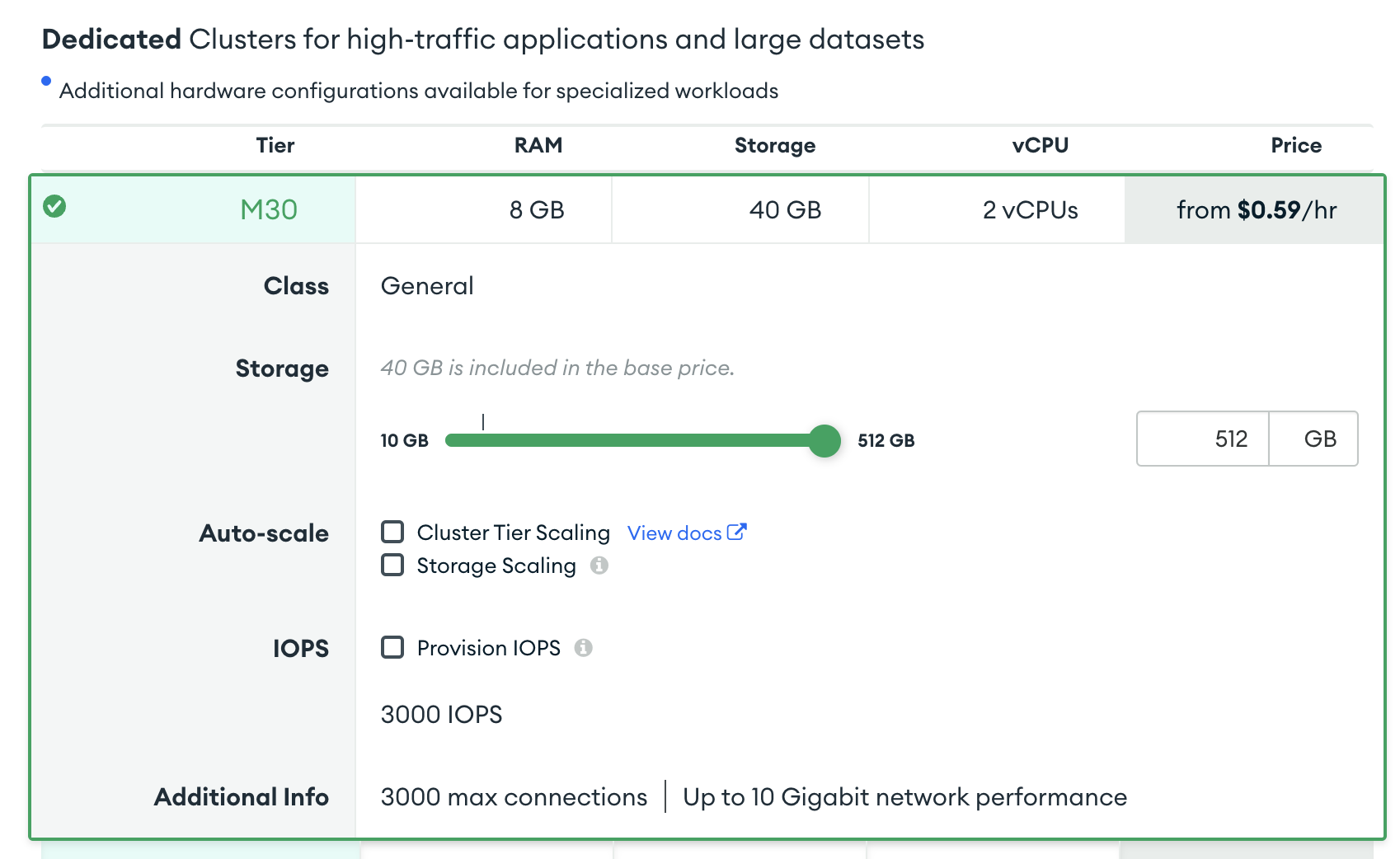

We will use the region nearest to our on-premises location to reduce latency. Remember to create an M30 cluster (M30 is the minimum tier that supports sharding) and to disable auto-scaling, so that the cluster does not scale in the middle of a long migration.

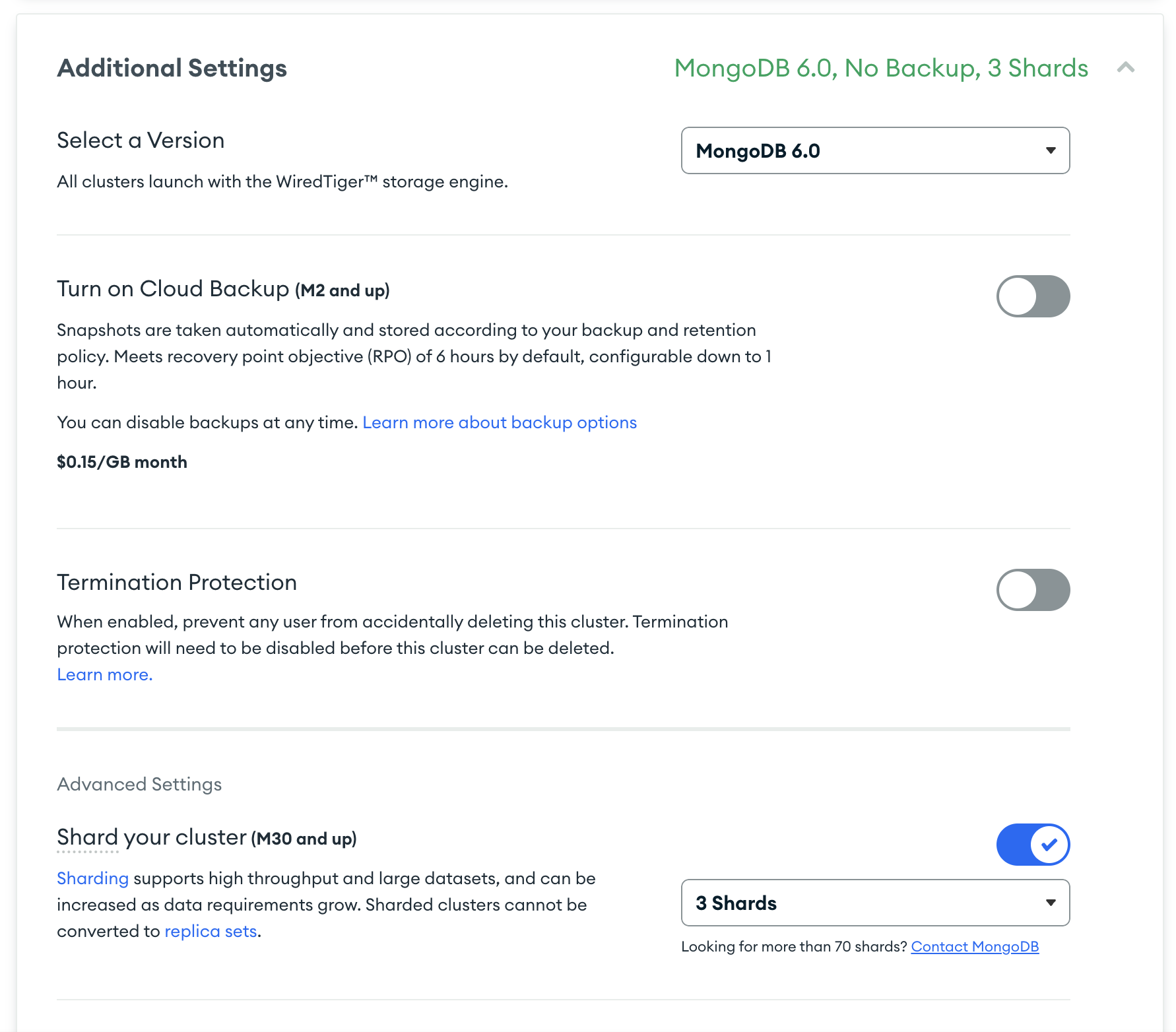

Also, don't forget to enable sharding.

Install mongosync

mongosync is a tool developed by MongoDB that migrates the data from the source cluster to the destination cluster and keeps both in sync until the cutover. More information about mongosync can be found in the MongoDB documentation.

I recommend downloading the tool from the MongoDB website, but you can also download the Amazon Linux 2 builds of versions 1.7.3 and 1.8.0 directly:

wget https://fastdl.mongodb.org/tools/mongosync/mongosync-amazon2-x86_64-1.7.3.tgz

wget https://fastdl.mongodb.org/tools/mongosync/mongosync-amazon2-x86_64-1.8.0.tgz

Builds of mongosync 1.9.0 for other platforms are available here:

https://fastdl.mongodb.org/tools/mongosync/mongosync-amazon2-x86_64-1.9.0.tgz

https://fastdl.mongodb.org/tools/mongosync/mongosync-macos-arm-arm64-1.9.0.zip

https://fastdl.mongodb.org/tools/mongosync/mongosync-macos-x86_64-1.9.0.zip

https://fastdl.mongodb.org/tools/mongosync/mongosync-rhel70-x86_64-1.9.0.tgz

https://fastdl.mongodb.org/tools/mongosync/mongosync-rhel80-x86_64-1.9.0.tgz

https://fastdl.mongodb.org/tools/mongosync/mongosync-ubuntu1804-x86_64-1.9.0.tgz

https://fastdl.mongodb.org/tools/mongosync/mongosync-ubuntu2004-x86_64-1.9.0.tgz

You might also want to install mongosh. To install it from the MongoDB Enterprise 7.0 repository on an Amazon Linux 2 VM, copy and paste these commands:

echo "[mongodb-enterprise-7.0]
name=MongoDB Enterprise Repository
baseurl=https://repo.mongodb.com/yum/amazon/2/mongodb-enterprise/7.0/\$basearch/
gpgcheck=1
enabled=1
gpgkey=https://pgp.mongodb.com/server-7.0.asc" | sudo tee /etc/yum.repos.d/mongodb-enterprise-7.0.repo

sudo yum install -y mongodb-mongosh

The mongosync.conf file could look like this:

cluster0: "mongodb://username:password@127.0.0.1:27028"
cluster1: "mongodb+srv://username:password@cluster0.zq2pr.mongodb.net/?retryWrites=true&w=majority&appName=Cluster0"
logPath: "/home/ec2-user/mongosync"
verbosity: "DEBUG"
loadLevel: 4

Here we explain the different options:

- cluster0 is the source cluster

- cluster1 is the destination cluster

- logPath is the path where the mongosync logs will be written

- verbosity is the level of detail written into the logs

- loadLevel controls how fast mongosync copies data: higher values copy faster but put more load on the source and destination clusters
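If the username or password contains reserved characters such as @, : or /, they must be percent-encoded before they go into the cluster0 and cluster1 connection strings. A minimal Python sketch using urllib.parse.quote_plus; the helper name build_uri and the sample credentials are hypothetical:

```python
from urllib.parse import quote_plus


def build_uri(scheme: str, user: str, password: str, hosts: str, query: str = "") -> str:
    """Return a MongoDB connection string with percent-encoded credentials.

    scheme is "mongodb" or "mongodb+srv"; hosts is, for example, "127.0.0.1:27028".
    """
    uri = f"{scheme}://{quote_plus(user)}:{quote_plus(password)}@{hosts}/"
    if query:
        uri += "?" + query
    return uri


# Hypothetical password with reserved characters: "@" -> %40, ":" -> %3A, "/" -> %2F
print(build_uri("mongodb", "migrator", "p@ss:w/rd", "127.0.0.1:27028"))
```

The printed string can be pasted into mongosync.conf as the value of cluster0 or cluster1.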

After extracting the archive, start mongosync with the configuration file:

./mongosync-amazon2-x86_64-1.7.3/bin/mongosync --config mongosync.conf

Initiate the migration

First, we check the progress:

curl localhost:27182/api/v1/progress -XGET | jq

The state should be IDLE:

{
  "progress": {
    "state": "IDLE",
    "canCommit": false,
    "canWrite": false,
    "info": null,
    "lagTimeSeconds": null,
    "collectionCopy": null,
    "directionMapping": null,
    "mongosyncID": "coordinator",
    "coordinatorID": ""
  }
}

Now we can start the migration:

curl localhost:27182/api/v1/start -XPOST \
  --data '
  {
    "source": "cluster0",
    "destination": "cluster1"
  } ' | jq

We should see the following message:

{
  "success": true
}
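The two steps above (confirm IDLE, then start) can also be scripted. A minimal Python sketch using only the standard library, assuming mongosync listens on the default localhost:27182 used in this article; the helper names are hypothetical:

```python
import json
import urllib.request
from typing import Optional

# Default mongosync API address used throughout this article.
MONGOSYNC_API = "http://localhost:27182/api/v1"


def is_idle(progress: dict) -> bool:
    """True when the /progress response reports the IDLE state."""
    return progress.get("progress", {}).get("state") == "IDLE"


def call(endpoint: str, payload: Optional[dict] = None) -> dict:
    """GET the endpoint when payload is None, otherwise POST payload as JSON."""
    data = None if payload is None else json.dumps(payload).encode()
    req = urllib.request.Request(
        f"{MONGOSYNC_API}/{endpoint}",
        data=data,
        headers={"Content-Type": "application/json"},
        method="GET" if data is None else "POST",
    )
    with urllib.request.urlopen(req) as resp:
        return json.load(resp)


def start_if_idle(source: str = "cluster0", destination: str = "cluster1") -> dict:
    """Start the sync only if mongosync has not started one already."""
    progress = call("progress")
    if not is_idle(progress):
        raise RuntimeError(f"mongosync is not IDLE: {progress}")
    return call("start", {"source": source, "destination": destination})


# Needs a running mongosync, so it is left commented out here:
# print(start_if_idle())
```

Refusing to call /start unless the state is IDLE avoids sending a second start request to a mongosync that is already syncing.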

Once the migration has started, we can check its status, pause it, or resume it with these commands:

curl localhost:27182/api/v1/progress -XGET | jq

curl localhost:27182/api/v1/pause -XPOST --data '{ }' | jq

curl localhost:27182/api/v1/resume -XPOST --data '{ }' | jq

Commit the migration

Before committing, stop application writes to the source cluster and wait until lagTimeSeconds is close to 0. Once the progress shows canCommit: true, run the following command to commit the migration:

curl localhost:27182/api/v1/commit -XPOST --data '{ }'

After the commit, wait until the progress shows state COMMITTED and canWrite: true before pointing your application to Atlas.

Remember that if you want to validate the migrated data, you can run the migration verifier AFTER the collection copy phase and BEFORE you commit. The migration verifier is covered in more detail below.
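Once verification is done and writes to the source have stopped, the cutover can be scripted: poll /progress until canCommit is true, POST /commit, then poll until the state is COMMITTED and canWrite is true. A minimal Python sketch assuming the default localhost:27182 address; the helper names and the 30-second polling interval are hypothetical choices:

```python
import json
import time
import urllib.request
from typing import Callable

MONGOSYNC_API = "http://localhost:27182/api/v1"


def get_progress() -> dict:
    """Return the inner "progress" document from GET /progress."""
    with urllib.request.urlopen(f"{MONGOSYNC_API}/progress") as resp:
        return json.load(resp)["progress"]


def post(endpoint: str) -> dict:
    """POST an empty JSON body, as the curl commands above do."""
    req = urllib.request.Request(
        f"{MONGOSYNC_API}/{endpoint}",
        data=b"{}",
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req) as resp:
        return json.load(resp)


def wait_for(predicate: Callable[[dict], bool], poll_seconds: float = 30.0,
             fetch: Callable[[], dict] = get_progress) -> dict:
    """Poll until predicate(progress) is true and return that progress document."""
    while True:
        progress = fetch()
        if predicate(progress):
            return progress
        print(f"state={progress.get('state')} lagTimeSeconds={progress.get('lagTimeSeconds')}")
        time.sleep(poll_seconds)


def can_commit(p: dict) -> bool:
    return bool(p.get("canCommit"))


def is_committed(p: dict) -> bool:
    return p.get("state") == "COMMITTED" and bool(p.get("canWrite"))


def commit_when_ready() -> None:
    wait_for(can_commit)
    result = post("commit")
    if not result.get("success"):
        raise RuntimeError(f"commit failed: {result}")
    wait_for(is_committed)
    print("COMMITTED: the destination accepts writes")


# Needs a running mongosync: commit_when_ready()
```

The fetch parameter of wait_for exists so the polling logic can be exercised without a live mongosync.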

Filtered namespace

If we want to sync only specific namespaces, mongosync supports that too.

The only change is the body of the request sent to the /start endpoint exposed by mongosync:

curl -X POST "http://localhost:27182/api/v1/start" --data '
{
  "source": "cluster0",
  "destination": "cluster1",
  "includeNamespaces": [
    {
      "database": "migrate",
      "collections": ["mycollection"]
    }
  ]
} ' | jq

Alternatively, you can use a regular expression to select the namespaces. In this example, we migrate the collections in the "migrate" database whose names start with "people_2" followed by a digit, such as "people_20" to "people_29". Because the pattern has no $ anchor at the end, it also matches longer names such as "people_205".

"includeNamespaces": [
  {
    "database": "migrate",
    "collectionsRegex": {
      "pattern": "^people_2[0-9]"
    }
  }
],
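It is worth testing a pattern against real collection names before starting the sync. The sketch below uses Python's re module as a stand-in for mongosync's own regex evaluation (an assumption: for a simple pattern like this, with only ^, $ and a character class, common regex flavors behave the same). The sample names are hypothetical:

```python
import re

PATTERN = re.compile(r"^people_2[0-9]")

names = ["people_20", "people_29", "people_2", "people_30", "people_205", "people_2a"]
matched = [n for n in names if PATTERN.search(n)]
print(matched)  # ['people_20', 'people_29', 'people_205']

# Adding a $ anchor restricts the match to exactly people_20 .. people_29.
EXACT = re.compile(r"^people_2[0-9]$")
print([n for n in names if EXACT.search(n)])  # ['people_20', 'people_29']
```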

Finally, you can also exclude namespaces:

"excludeNamespaces": [
  {
    "database": "simrunner",
    "collections": [
      "sharded_25"
    ]
  }
]
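Because the filters are just extra fields in the /start body, building that body in code avoids hand-editing JSON. A minimal Python sketch; filtered_start_payload is a hypothetical helper, and whether mongosync accepts a particular combination of include and exclude filters is decided by its own validation rules:

```python
import json
from typing import List, Optional


def filtered_start_payload(include: Optional[List[dict]] = None,
                           exclude: Optional[List[dict]] = None,
                           source: str = "cluster0",
                           destination: str = "cluster1") -> dict:
    """Build the JSON body for POST /api/v1/start with optional namespace filters.

    Each filter dict uses the shapes shown above, e.g.
    {"database": "migrate", "collections": ["mycollection"]}.
    """
    payload = {"source": source, "destination": destination}
    if include:
        payload["includeNamespaces"] = include
    if exclude:
        payload["excludeNamespaces"] = exclude
    return payload


regex_body = filtered_start_payload(
    include=[{"database": "migrate", "collectionsRegex": {"pattern": "^people_2[0-9]"}}]
)
exclude_body = filtered_start_payload(
    exclude=[{"database": "simrunner", "collections": ["sharded_25"]}]
)
print(json.dumps(regex_body, indent=2))
```

The printed JSON can be passed to curl with --data, exactly like the hand-written bodies above.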

Reversible sync

If, after the cutover, you need the ability to point your application back to the source cluster, mongosync supports this with reversible sync. Reversible sync is not available if you change the topology during the migration or if the major versions of the source and destination clusters don't match.

To enable it, set both reversible and enableUserWriteBlocking to true in the /start request:

curl -X POST "http://localhost:27182/api/v1/start" --data '
{
  "source": "cluster0",
  "destination": "cluster1",
  "reversible" : true,
  "enableUserWriteBlocking" : true
} ' | jq
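The two restrictions stated above can be checked before deciding to start a reversible sync. This Python sketch encodes only those two rules from this article (same major version, no topology change); it is not mongosync's complete list of requirements. The helper names are hypothetical, and shard counts stand in for "topology", with 0 as a hypothetical marker for a replica set:

```python
from typing import List


def major(version: str) -> str:
    """'7.0.14' -> '7.0': the MongoDB major release series."""
    return ".".join(version.split(".")[:2])


def reversible_blockers(src_version: str, dst_version: str,
                        src_shards: int, dst_shards: int) -> List[str]:
    """Return the reasons (per this article's rules) reversible sync can't be used."""
    blockers = []
    if major(src_version) != major(dst_version):
        blockers.append(f"major versions differ: {major(src_version)} vs {major(dst_version)}")
    if src_shards != dst_shards:
        blockers.append(f"topology changes: {src_shards} -> {dst_shards} shards")
    return blockers


def reversible_start_payload(source: str = "cluster0", destination: str = "cluster1") -> dict:
    """Body for POST /api/v1/start with reversible sync enabled."""
    return {"source": source, "destination": destination,
            "reversible": True, "enableUserWriteBlocking": True}


# The 3-shard to 1-shard scenario of this article:
print(reversible_blockers("7.0.12", "7.0.14", 3, 1))
```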

Verify your migration

It is important to verify the migration, and there are several ways to do it:

- Document Counts: compare the number of documents in each collection on the source and destination clusters.

- Hash Comparison: verify the sync by comparing the MD5 hashes of the synced collections on the source and destination clusters.

db.runCommand({
  dbHash: 1,
  collections: [
    "accounts.us_accounts",
    "accounts.eu_accounts",
    ...
  ]
}).md5

- Document Comparison: verify the sync by comparing the documents on the source and destination clusters. I will show a possible implementation of such a script in another post.

- Migration Verifier: this is the option that we will use today.
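For the count and hash approaches, the values still have to be compared per collection. If you collect them yourself on each cluster (for example, with db.collection.countDocuments({}) in mongosh, after writes to the source have stopped), a small helper can report the differences. A minimal Python sketch; the function name and the namespace-to-value input format are hypothetical:

```python
from typing import Dict, List


def diff_collections(source: Dict[str, object], destination: Dict[str, object]) -> List[str]:
    """Compare per-collection values such as document counts or hashes.

    Both arguments map a namespace like "accounts.us_accounts" to the value
    collected on that cluster. Returns one line per mismatch.
    """
    problems = []
    for ns in sorted(set(source) | set(destination)):
        if ns not in destination:
            problems.append(f"{ns}: missing on destination")
        elif ns not in source:
            problems.append(f"{ns}: only on destination")
        elif source[ns] != destination[ns]:
            problems.append(f"{ns}: source={source[ns]} destination={destination[ns]}")
    return problems


src_counts = {"accounts.us_accounts": 1200, "accounts.eu_accounts": 845}  # hypothetical
dst_counts = {"accounts.us_accounts": 1200, "accounts.eu_accounts": 844}  # hypothetical
print(diff_collections(src_counts, dst_counts))
```

An empty list means every namespace matched.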

To use the migration verifier, clone its public repository and compile the code (this requires a Go toolchain):

git clone https://github.com/mongodb-labs/migration-verifier

cd migration-verifier

go build main/migration_verifier.go

Then, create a config file to store the options and connection strings:

srcURI: "mongodb://username:password@127.0.0.1:27028"
dstURI: "mongodb+srv://username:password@cluster0.zq2pr.mongodb.net/?retryWrites=true&w=majority&appName=Cluster0"
metaURI: "mongodb+srv://username:password@cluster0.zq2pr.mongodb.net/?retryWrites=true&w=majority&appName=Cluster0"
serverPort: 28001
metaDBName: mongoverifierdb

The final step is to run the migration verifier:

./migration_verifier --configFile migrationverifierconfig.conf

Other available options include:

- --clean -> removes the metadata database and starts from scratch. It does not delete source or destination data.

- --verifyAll -> verifies all the namespaces

- --srcNamespace -> verifies only the given namespace on the source

- --dstNamespace -> verifies only the given namespace on the destination

- --numWorkers -> increases the number of workers used to compare the data

Before mongosync reaches the COMMITTED state (and AFTER the collection copy), you can start the verification process by calling this endpoint:

curl -H "Content-Type: application/json" -X POST -d '{}' http://127.0.0.1:28001/api/v1/check | jq

At a minimum, let a first full verification pass complete. When you are happy with the results and writes to the source have stopped, tell the verifier that writes are off by calling this endpoint, so it can finish its remaining checks:

curl -H "Content-Type: application/json" -X POST -d '{}' http://127.0.0.1:28001/api/v1/writesOff | jq

Now you are ready to commit the migration in mongosync.

You can check the verifier's status by calling this endpoint:

curl -H "Content-Type: application/json" -X GET http://127.0.0.1:28001/api/v1/progress | jq
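The three verifier calls above (check, progress, writesOff) can be wrapped in a small script. A minimal Python sketch assuming the verifier listens on 127.0.0.1:28001 as set by serverPort in the config above; the helper names are hypothetical, and no particular fields of the /progress response are assumed, so it is printed as-is:

```python
import json
import time
import urllib.request

# serverPort 28001 comes from the verifier config above.
VERIFIER_API = "http://127.0.0.1:28001/api/v1"


def verifier_request(endpoint: str, method: str = "GET") -> urllib.request.Request:
    """Build a request for the verifier API; POSTs send an empty JSON body like the curl calls."""
    return urllib.request.Request(
        f"{VERIFIER_API}/{endpoint}",
        data=b"{}" if method == "POST" else None,
        headers={"Content-Type": "application/json"},
        method=method,
    )


def send(req: urllib.request.Request) -> dict:
    with urllib.request.urlopen(req) as resp:
        return json.load(resp)


def watch_progress(rounds: int, interval_seconds: float = 60.0) -> None:
    """Print the raw /progress response a few times."""
    for _ in range(rounds):
        print(json.dumps(send(verifier_request("progress")), indent=2))
        time.sleep(interval_seconds)


# Typical sequence (requires a running verifier):
# send(verifier_request("check", "POST"))      # after mongosync's collection copy
# watch_progress(rounds=10)                     # until a first pass has completed
# send(verifier_request("writesOff", "POST"))  # once writes to the source have stopped
```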

Common errors

Error 1 (using mongosync 1.7.3)

{
  "success": false,
  "error": "ClusterValidationError",
  "errorDescription": "source and destination version compatibility check: server FCV check for the source: could not decode the featureCompatibilityVersion response: (Unauthorized) not authorized on admin to execute command { find: \"system.version\", filter: { _id: \"featureCompatibilityVersion\" }, limit: 1, singleBatch: true, readConcern: { level: \"majority\" }, lsid: { id: UUID(\"a561d032-110a-453e-9e4f-79e2d0f9f4ec\") }, $clusterTime: { clusterTime: Timestamp(1726123821, 1), signature: { hash: BinData(0, AF0218CE4BA94EF8E88A8DB583D7D1FDB6A19A77), keyId: 7413361922264793112 } }, maxTimeMS: 300000, $db: \"admin\" }"
}

The mongosync user needs permission to read the cluster's FCV (featureCompatibilityVersion). To fix this, grant the required roles to the user that mongosync uses to connect to the source cluster (the destination user needs its own set of roles):

| Sync Type | Required Source Permissions | Required Destination Permissions |
|---|---|---|
| Default | backup, clusterMonitor, readAnyDatabase | clusterManager, clusterMonitor, readWriteAnyDatabase, restore |

After changing the permissions, restart mongosync.
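On the self-managed source, the missing roles can be granted to an existing user with the grantRolesToUser command (in Atlas, database users and their roles are managed through the Atlas UI or Admin API instead). A minimal Python sketch that only builds the command document; the user name "mongosync" is hypothetical, and sending it with PyMongo, e.g. MongoClient(src_uri).admin.command(cmd), is an assumption about your tooling:

```python
from typing import Dict, List

# Built-in roles from the permissions table; all of them are defined on the admin database.
SOURCE_ROLES = ["backup", "clusterMonitor", "readAnyDatabase"]
DESTINATION_ROLES = ["clusterManager", "clusterMonitor", "readWriteAnyDatabase", "restore"]


def grant_roles_command(user: str, roles: List[str]) -> Dict[str, object]:
    """Build a grantRolesToUser command; the command name must be the first key."""
    return {
        "grantRolesToUser": user,
        "roles": [{"role": role, "db": "admin"} for role in roles],
    }


cmd = grant_roles_command("mongosync", SOURCE_ROLES)
print(cmd)
```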

Error 2 (using mongosync 1.7.3)

If the source version you are using is not supported, you should receive a message like the following:

{
  "success": false,
  "error": "ClusterValidationError",
  "errorDescription": "source: version 5.0.28 is unsupported"
}

In this case, contact MongoDB Professional Services for help.

Error 3 (using mongosync 1.7.3)

When migrating from a sharded cluster to a sharded cluster, the balancer must be disabled on both the source and the destination. Otherwise, mongosync returns:

{
  "success": false,
  "error": "APIError",
  "errorDescription": "The destination cluster's balancer is turned on. Please ensure that (a) the source and destination cluster balancers are both turned off by running balancerStop on both clusters and waiting for the command to complete, and that (b) no moveChunk or moveRange operations are occurring on either the source or destination cluster. Then, delete all migrated data on the destination cluster and start a new migration. There must be no chunk migration activity on either the source or destination cluster for the entire lifetime of the migration."
}

This is a fatal error that stops mongosync. The mongosync log shows the same message along with a stack trace:


{"time":"2024-09-12T07:45:45.970381Z","level":"error","serverID":"6ee33405","stack":[{"func":"EnsureBalancerIsOff","line":"391","source":"/data/mci/7741a13740fe75c9cca29f39d7dbd761/src/github.com/10gen/mongosync/internal/mongosync/util/sharding/sharding.go"},{"func":"EnsureAllBalancersAreOffIfClustersAreSharded","line":"352","source":"/data/mci/7741a13740fe75c9cca29f39d7dbd761/src/github.com/10gen/mongosync/internal/mongosync/util/sharding/sharding.go"},{"func":"(*Mongosync).Start","line":"1107","source":"/data/mci/7741a13740fe75c9cca29f39d7dbd761/src/github.com/10gen/mongosync/internal/mongosync/mongosync.go"},{"func":"(*WebServer).startHandler","line":"323","source":"/data/mci/7741a13740fe75c9cca29f39d7dbd761/src/github.com/10gen/mongosync/internal/webserver/server.go"},{"func":"(*WebServer).wrapHandler.func1","line":"151","source":"/data/mci/7741a13740fe75c9cca29f39d7dbd761/src/github.com/10gen/mongosync/internal/webserver/server.go"},{"func":"(*Context).Next","line":"174","source":"/data/mci/7741a13740fe75c9cca29f39d7dbd761/src/github.com/10gen/mongosync/vendor/github.com/gin-gonic/gin/context.go"},{"func":"(*WebServer).operationalAPILockMiddleware","line":"164","source":"/data/mci/7741a13740fe75c9cca29f39d7dbd761/src/github.com/10gen/mongosync/internal/webserver/server.go"},{"func":"(*Context).Next","line":"174","source":"/data/mci/7741a13740fe75c9cca29f39d7dbd761/src/github.com/10gen/mongosync/vendor/github.com/gin-gonic/gin/context.go"},{"func":"CustomRecoveryWithWriter.func1","line":"102","source":"/data/mci/7741a13740fe75c9cca29f39d7dbd761/src/github.com/10gen/mongosync/vendor/github.com/gin-gonic/gin/recovery.go"},{"func":"(*Context).Next","line":"174","source":"/data/mci/7741a13740fe75c9cca29f39d7dbd761/src/github.com/10gen/mongosync/vendor/github.com/gin-gonic/gin/context.go"},{"func":"(*WebServer).RequestAndResponseLogger.func1","line":"64","source":"/data/mci/7741a13740fe75c9cca29f39d7dbd761/src/github.com/10gen/mongosync/internal/webserver/request_and_response.go"},{"func":"(*Context).Next","line":"174","source":"/data/mci/7741a13740fe75c9cca29f39d7dbd761/src/github.com/10gen/mongosync/vendor/github.com/gin-gonic/gin/context.go"},{"func":"(*Engine).handleHTTPRequest","line":"620","source":"/data/mci/7741a13740fe75c9cca29f39d7dbd761/src/github.com/10gen/mongosync/vendor/github.com/gin-gonic/gin/gin.go"},{"func":"(*Engine).ServeHTTP","line":"576","source":"/data/mci/7741a13740fe75c9cca29f39d7dbd761/src/github.com/10gen/mongosync/vendor/github.com/gin-gonic/gin/gin.go"},{"func":"serverHandler.ServeHTTP","line":"2936","source":"/opt/golang/go1.20/src/net/http/server.go"},{"func":"(*conn).serve","line":"1995","source":"/opt/golang/go1.20/src/net/http/server.go"},{"func":"goexit","line":"1598","source":"/opt/golang/go1.20/src/runtime/asm_amd64.s"}],"error":"The destination cluster's balancer is turned on. Please ensure that (a) the source and destination cluster balancers are both turned off by running balancerStop on both clusters and waiting for the command to complete, and that (b) no moveChunk or moveRange operations are occurring on either the source or destination cluster. Then, delete all migrated data on the destination cluster and start a new migration. There must be no chunk migration activity on either the source or destination cluster for the entire lifetime of the migration.","message":"Mongosync errored while starting."}

To stop the balancer and then check its status, run these commands on both clusters (connected through mongos):

db.adminCommand( { balancerStop: 1})

db.adminCommand( { balancerStatus: 1})
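Before starting mongosync, the balancerStatus output of both clusters should confirm that the balancer is off and that no balancing round is still running. A minimal Python sketch that interprets that output; it assumes you fetch the documents yourself (for example with PyMongo's client.admin.command("balancerStatus")), and the helper names and cluster labels are hypothetical:

```python
from typing import Dict


def balancer_is_stopped(status: dict) -> bool:
    """Interpret db.adminCommand({balancerStatus: 1}) output.

    Safe to proceed only when mode is "off" and no balancing round is still running.
    """
    return status.get("mode") == "off" and not status.get("inBalancerRound", False)


def all_balancers_stopped(statuses: Dict[str, dict]) -> bool:
    """statuses maps a cluster label (e.g. "source") to its balancerStatus output."""
    stopped = True
    for cluster, status in statuses.items():
        if not balancer_is_stopped(status):
            print(f"{cluster}: balancer still active (mode={status.get('mode')})")
            stopped = False
    return stopped
```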

Error 4 (using mongosync 1.7.3)

If you see an error like this one:

{
  "success": false,
  "error": "InvalidRsToScOptions",
  "errorDescription": "Must provide `sharding` option for Replica Set to Sharded Cluster migration"
}

The problem is that you are migrating from a replica set to a sharded cluster, so you need to include the sharding field in the /start request:

{
  "source": "cluster0",
  "destination": "cluster1",
  "sharding": {
    "createSupportingIndexes": true,
    "shardingEntries": [
      {
        "database": "accounts",
        "collection": "us_east",
        "shardCollection": {
          "key": [
            { "location": 1 },
            { "region": 1 }
          ]
        }
      }
    ]
  }
}
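With several collections to shard, generating the sharding section in code avoids JSON slips such as trailing commas. A minimal Python sketch that produces the same structure as the request above; sharding_entry is a hypothetical helper, and the key is kept as an ordered list of single-field documents because shard key field order matters:

```python
import json
from typing import List, Tuple


def sharding_entry(database: str, collection: str, key_fields: List[Tuple[str, int]]) -> dict:
    """One shardingEntries item; key_fields is an ordered list of (field, 1) pairs."""
    return {
        "database": database,
        "collection": collection,
        "shardCollection": {"key": [{field: direction} for field, direction in key_fields]},
    }


payload = {
    "source": "cluster0",
    "destination": "cluster1",
    "sharding": {
        "createSupportingIndexes": True,
        "shardingEntries": [
            sharding_entry("accounts", "us_east", [("location", 1), ("region", 1)]),
        ],
    },
}
print(json.dumps(payload, indent=2))
```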

Error 5 (using mongosync 1.9)

{"time":"2025-01-14T09:42:56.050868Z","level":"fatal","serverID":"beb74c4c","mongosyncID":"coordinator","stack":[{"func":"Wrapf","line":"258","source":"/data/mci/3da273a495841ece4c5c2e9c9c892bcc/src/github.com/10gen/mongosync/internal/labelederror/labelederror_constructors.go"},{"func":"Wrap","line":"238","source":"/data/mci/3da273a495841ece4c5c2e9c9c892bcc/src/github.com/10gen/mongosync/internal/labelederror/labelederror_constructors.go"},{"func":"(*Mongosync).runChangeEventApplicationPhase","line":"387","source":"/data/mci/3da273a495841ece4c5c2e9c9c892bcc/src/github.com/10gen/mongosync/internal/mongosync/mongosync_replicate.go"},{"func":"(*Mongosync).runPhase","line":"160","source":"/data/mci/3da273a495841ece4c5c2e9c9c892bcc/src/github.com/10gen/mongosync/internal/mongosync/mongosync_replicate.go"},{"func":"(*Mongosync).runFromCurrentPhase","line":"89","source":"/data/mci/3da273a495841ece4c5c2e9c9c892bcc/src/github.com/10gen/mongosync/internal/mongosync/mongosync_replicate.go"},{"func":"(*Mongosync).Replicate","line":"73","source":"/data/mci/3da273a495841ece4c5c2e9c9c892bcc/src/github.com/10gen/mongosync/internal/mongosync/mongosync_replicate.go"},{"func":"(*Mongosync).launchReplicationGoroutines.func1","line":"956","source":"/data/mci/3da273a495841ece4c5c2e9c9c892bcc/src/github.com/10gen/mongosync/internal/mongosync/mongosync.go"},{"func":"goexit","line":"1695","source":"/opt/golang/go1.22/src/runtime/asm_amd64.s"}],"error":{"msErrorLabels":["unclassifiedError"],"clientType":"source","operationDescription":"Change Stream Reader (DDL) is processing change stream.","message":"change event application failed: `Change Stream Reader (DDL)` failed: failed to iterate change stream: Change Stream Reader (DDL) could not retrieve the next change event using last resume token \"8267863110000051A52B0429296E1404\" (timestamp = {T:1736847632 I:20901}): (ChangeStreamHistoryLost) Error on remote shard atlas-tjp1t9-shard-01-02.kl1yf.mongodb.net:27017 :: caused by :: Executor error during getMore :: caused by :: Resume of change stream was not possible, as the resume point may no longer be in the oplog."},"message":"Got an error during replication."}

This error happens when the source oplog window is too small. In this case, mongosync was copying data (collection copy phase) while the source was receiving a heavy insert load. Because the oplog was not large enough, it rolled over, and the entry from when the collection copy started was no longer in the oplog when mongosync moved on to the change event application (CEA) phase.

Remember: The mongosync program uses change streams to synchronize data between source and destination clusters. mongosync does not access the oplog directly, but when a change stream returns events from the past, the events must be within the oplog time range.

To avoid this issue, increase the size of the oplog. You can find more information in the "Oplog sizing" section of the MongoDB documentation; the instructions are also summarized below.

Monitor oplog Size Needed for Initial Sync

- Determine oplog Window: To get the difference in seconds between the first and last entry in the oplog run db.getReplicationInfo(). If you are replicating a sharded cluster, run the command on each shard.

db.getReplicationInfo().timeDiff

The value returned is the oplog window, in seconds, of the replica set you ran it on. If there are multiple shards, the smallest value across all shards is the minimum oplog window of the cluster.

- Determine mongosync Replication Lag: To get the lagTimeSeconds value, call the /progress endpoint. The lag time is the time in seconds between the last event applied by mongosync and the time of the latest event on the source cluster.

It is a measure of how far behind the source cluster mongosync is.

- Validate oplog Size: If the lag time approaches the minimum oplog window, make one of the following changes:

  - Increase the oplog window. Use replSetResizeOplog to set minRetentionHours greater than the current oplog window.

  - Scale up the mongosync instance. Add CPU or memory to scale up the mongosync node so that it has a higher copy rate.
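The checks above reduce to a little arithmetic: take the smallest timeDiff across shards, compare it with lagTimeSeconds, and, if needed, request a larger minRetentionHours. A minimal Python sketch; the helper names are hypothetical, the sample values are made up, and safety_ratio=0.5 is an arbitrary margin standing in for "approaches", not a documented threshold. Note that replSetResizeOplog changes only the member it runs on, so it has to be run on every member of every shard:

```python
import math
from typing import Iterable


def min_oplog_window(time_diffs: Iterable[float]) -> float:
    """Smallest db.getReplicationInfo().timeDiff (seconds) across all shards."""
    return min(time_diffs)


def oplog_at_risk(lag_seconds: float, time_diffs: Iterable[float],
                  safety_ratio: float = 0.5) -> bool:
    """True when lagTimeSeconds reaches safety_ratio of the minimum oplog window."""
    return lag_seconds >= safety_ratio * min_oplog_window(time_diffs)


def resize_oplog_command(window_seconds: float, factor: float = 2.0) -> dict:
    """replSetResizeOplog command asking for `factor` times the current window, in hours."""
    hours = math.ceil(window_seconds * factor / 3600)
    return {"replSetResizeOplog": 1, "minRetentionHours": hours}


shard_windows = [86400, 43200, 100000]  # hypothetical timeDiff values, one per shard
print(min_oplog_window(shard_windows), oplog_at_risk(30000, shard_windows))
print(resize_oplog_command(min_oplog_window(shard_windows)))
```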

Links

- More information about mongosync behaviour here.

- Different states of mongosync.

Important note

The intention of this blog entry is purely informative, and I am not responsible for any loss that you incur by following it. Please always remember to test this in lower environments, and for critical workloads I recommend contacting MongoDB Professional Services, where we will be delighted to help you.