Embedding graphical representation

We have covered what an embedding is, how to store embeddings in MongoDB using Atlas Search, and why it is important to keep them updated. You can read more about that in the solution I prepared for MongoDB in their Solutions Library.

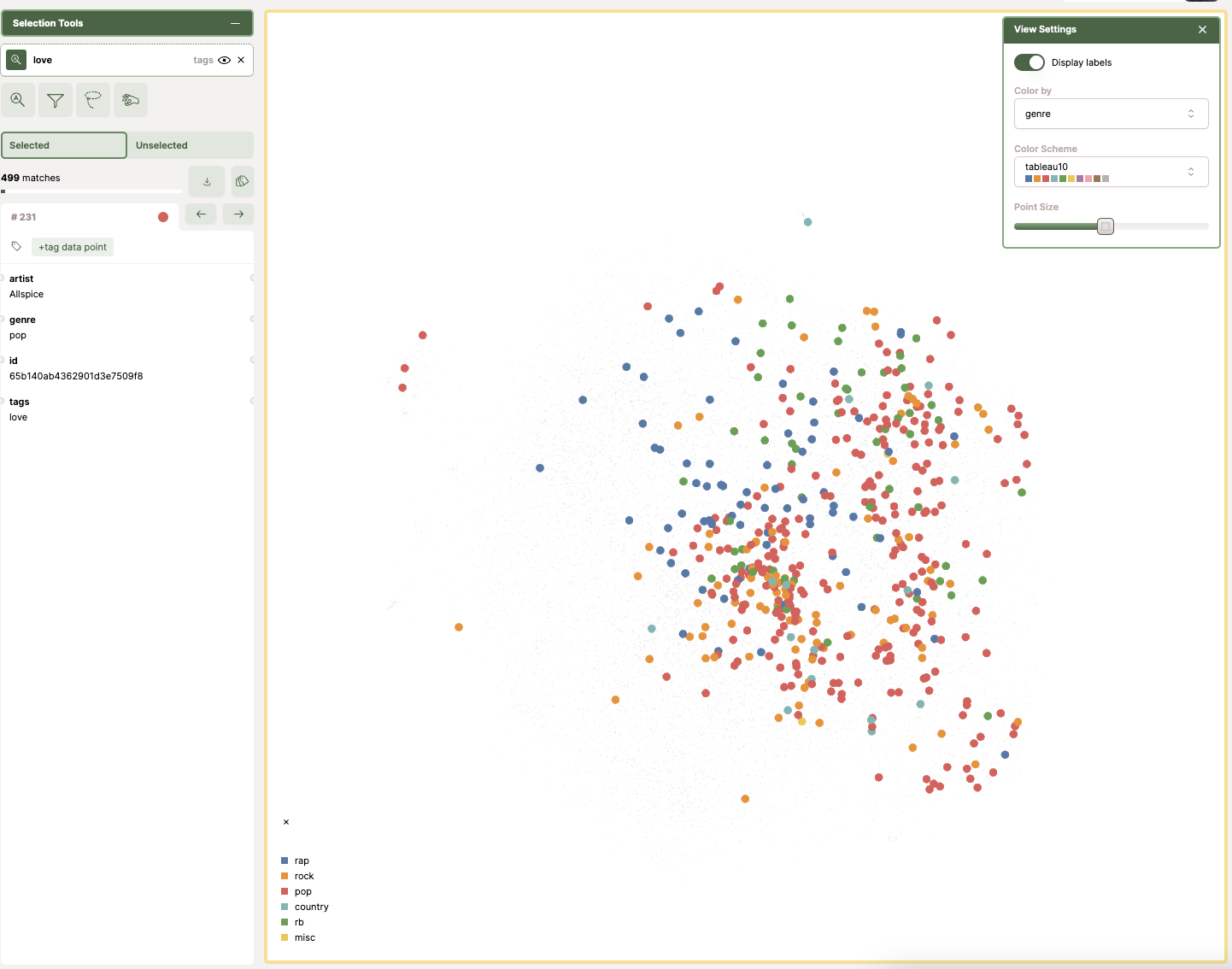

Now that we know how to extract the semantics of our data and represent them as embeddings, the next step is to represent that data visually so that we can understand it and spot relationships.

There are multiple ways of doing so. One of them is Atlas, a solution from Nomic AI (not to be confused with MongoDB Atlas, which is MongoDB's managed database-as-a-service).

By the way, if you want to run an LLM locally without paying a third party, Nomic AI provides a friendly solution that uses your computer's GPU, which is particularly nice on a modern Mac with an M-series processor. It is a multi-OS desktop application that only connects to the internet to download the model. This is the link: GPT4All

This repository provides a detailed walkthrough on how to represent embeddings graphically.

Prepare Nomic installation:

The first step is to get a token from Nomic. Then install the client and log in with that token:

pip install nomic

nomic login [token]

Script:

from pymongo import MongoClient
from nomic import atlas
from pandas import DataFrame
import numpy as np

# Requires the PyMongo package.
# https://api.mongodb.com/python/current

# Fill in your MongoDB connection string.
client = MongoClient('')

# Select up to 20,000 documents that have English lyrics embeddings,
# exposing _id as a string 'id' and keeping only the first tag.
cursor = client['streamingvectors']['lyrics'].aggregate([
    {
        '$match': {
            'lyrics_embeddings_en': {
                '$exists': True
            }
        }
    }, {
        '$addFields': {
            'id': {
                '$toString': '$_id'
            }
        }
    }, {
        '$project': {
            '_id': 0,
            'id': 1,
            'lyrics_embeddings_en': 1,
            'artist': 1,
            'tags': {'$first': '$tags'},
            'genre': 1
        }
    }, {
        '$limit': 20000
    }
])

list_cur = list(cursor)

# Convert the list of embeddings to a numpy array
embeddings_lst = []
for doc in list_cur:
    embeddings_lst.append(doc['lyrics_embeddings_en'])
np_numpy_embeddings = np.array(embeddings_lst)

# Create a DataFrame from cursor
df = DataFrame(list_cur, columns=['artist', 'tags', 'genre', 'id'])
df_refined = df.astype({'artist': 'string', 'tags': 'string', 'genre': 'string', 'id': 'string'})

dataset = atlas.map_data(data=df_refined, embeddings=np_numpy_embeddings, id_field='id', identifier="lyrics_dataset")
# colorable_fields=['label'],
#https://www.moschetti.org/rants/mongopandas.html

Execute the script:

python3 upload_nomic.py

When you execute the script, it reads up to 20,000 song documents that have English lyrics embeddings, keeping the first element of each song's list of tags along with the embeddings.
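To make the shape of the pipeline's output concrete, here is a minimal pure-Python sketch of the same steps: keep documents that have an embedding, convert `_id` to a string, and keep only the first tag. The sample documents and the `shape_documents` helper are hypothetical and exist only for illustration. In the actual script MongoDB does this work inside the aggregation, and `None` here only approximates how MongoDB handles a missing value.

```python
# Hypothetical sample documents; field names mirror the lyrics collection.
sample_docs = [
    {"_id": 1, "artist": "A", "genre": "pop", "tags": ["love", "dance"],
     "lyrics_embeddings_en": [0.1, 0.2, 0.3]},
    {"_id": 2, "artist": "B", "genre": "rock", "tags": [],
     "lyrics_embeddings_en": [0.4, 0.5, 0.6]},
    {"_id": 3, "artist": "C", "genre": "jazz", "tags": ["slow"]},  # no embedding
]


def shape_documents(docs, limit=20000):
    """Approximate the $match / $addFields / $project / $limit stages."""
    shaped = []
    for doc in docs:
        # $match: skip documents without the embedding field
        if "lyrics_embeddings_en" not in doc:
            continue
        tags = doc.get("tags") or []
        shaped.append({
            "id": str(doc["_id"]),               # $toString: '$_id'
            "lyrics_embeddings_en": doc["lyrics_embeddings_en"],
            "artist": doc.get("artist"),
            "tags": tags[0] if tags else None,   # $first: '$tags'
            "genre": doc.get("genre"),
        })
        if len(shaped) >= limit:                 # $limit
            break
    return shaped


rows = shape_documents(sample_docs)
print([(r["id"], r["tags"]) for r in rows])
```

Each resulting row carries one string tag rather than a list, which is what lets the script cast the `tags` column to a string type in the DataFrame.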

Then, it loads everything into a DataFrame and uploads it into Nomic Atlas.
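Before uploading, it can help to verify that every embedding has the same length, since the embedding matrix needs one regular row per DataFrame row. The check below is only a sketch: `find_bad_embeddings` is a hypothetical helper, not part of PyMongo or Nomic, and the sample rows are made up.

```python
from collections import Counter


def find_bad_embeddings(docs, field="lyrics_embeddings_en"):
    """Return (expected_dim, ids of docs whose vector has a different length)."""
    lengths = Counter(len(doc[field]) for doc in docs)
    if not lengths:
        return None, []
    # Treat the most common length as the expected dimension.
    expected_dim = lengths.most_common(1)[0][0]
    bad_ids = [doc["id"] for doc in docs if len(doc[field]) != expected_dim]
    return expected_dim, bad_ids


# Made-up rows shaped like the pipeline output.
candidate_rows = [
    {"id": "a", "lyrics_embeddings_en": [0.1, 0.2, 0.3]},
    {"id": "b", "lyrics_embeddings_en": [0.4, 0.5, 0.6]},
    {"id": "c", "lyrics_embeddings_en": [0.7, 0.8]},  # truncated vector
]

dim, bad = find_bad_embeddings(candidate_rows)
print(dim, bad)
```

In the script, a check like this could run on `list_cur` right before the numpy conversion, so that any offending documents are dropped or fixed first.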

There, you can explore the dataset and filter by terms. If we search for songs that talk about love, we see the dots (each dot represents a song, and its colour a genre), and we can start our data analysis visually. In this case, we can see that most of the songs that talk about love are "pop" songs.
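A visual impression like this can be cross-checked numerically. As a rough sketch, the snippet below counts genres among rows whose tag contains a search term. The sample rows and the `genre_counts` helper are hypothetical, and a plain tag match is only a crude stand-in for the search that Nomic Atlas performs.

```python
from collections import Counter


def genre_counts(rows, term):
    """Count genres of rows whose tag contains the term (case-insensitive)."""
    term = term.lower()
    return Counter(
        row["genre"]
        for row in rows
        if row.get("tags") and term in row["tags"].lower()
    )


# Made-up rows; in the script these would come from the DataFrame.
songs = [
    {"id": "1", "genre": "pop", "tags": "love"},
    {"id": "2", "genre": "pop", "tags": "Love songs"},
    {"id": "3", "genre": "rock", "tags": "love"},
    {"id": "4", "genre": "jazz", "tags": "instrumental"},
    {"id": "5", "genre": "rock", "tags": None},  # song without a tag
]

counts = genre_counts(songs, "love")
print(counts.most_common())
```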